The Hidden Cost of Ungoverned AI in Sales

Nov 7, 2025

Generative AI has entered the enterprise sales ecosystem with astonishing speed. Every sales organization now experiments with AI to write emails, predict deal outcomes, or generate buyer insights. But as the tools multiply, so do the risks. Across industries, organizations are rushing to deploy AI faster than they can govern it. The result is a growing gap between what AI can do and what companies can control. In sales, that gap is turning into a costly liability.

Forrester recently warned that B2B companies could lose more than ten billion dollars in enterprise value because of the ungoverned adoption of generative AI across marketing, sales, and product. These losses stem not from failed models but from failed governance: data, models, and human judgment operating in isolation from one another.

Ungoverned AI is quietly eroding value through misinformation, compliance violations, and declining buyer trust. The irony is that the same technology designed to improve sales efficiency can, when unmanaged, undermine the integrity of the entire revenue process.

The solution is not to slow down AI adoption, but to build it on stronger ground. Responsible governance, embedded within every sales workflow, can transform AI from a liability into a lever for trust, transparency, and long-term growth.

What Ungoverned AI Looks Like in Sales

In practice, ungoverned AI describes any use of generative AI that lacks oversight, data lineage, validation, or accountability. It is what happens when technology moves faster than process.

A few examples illustrate how common this already is:

Shadow AI tools in sales: Representatives use public large language models to draft prospect emails or proposals without security controls or brand checks. The content looks polished but may include inaccuracies or expose confidential data.

Automated proposal generation: Generative engines create sales quotes or contracts with nonstandard terms that violate internal pricing policies or regional compliance laws.

AI-driven deal forecasts: Models trained on incomplete or biased CRM data produce inaccurate revenue projections, which cascade into poor planning and missed targets.

Unverified chat assistants: Buyer-facing bots answer pricing or capability questions using open data sources, creating a risk of misinformation and potential legal claims.

These are not rare edge cases. They are daily realities in sales teams experimenting with generative AI without formal guardrails. The immediate result may be efficiency, but the downstream effect is misalignment, risk exposure, and erosion of trust.

The Real Cost of Losing Control

When AI operates without governance, the risk is not just technical failure but organizational fallout.

In 2025, Deloitte faced public scrutiny after an AI-generated report for the Australian government was found to contain factual errors and misleading claims. The result was immediate: contracts were paused, credibility was questioned, and trust eroded.

In the United States, state bar associations are investigating whether AI-generated legal briefs misstate case law or misattribute sources. Universities, too, have retracted academic papers after discovering that authors used AI tools to fabricate or misquote references, undermining confidence in research integrity.

These incidents show how ungoverned AI can turn small lapses into major consequences, with the impact compounding quickly across financial, legal, and reputational dimensions.

1. Legal and regulatory exposure

A single AI-generated sales proposal that includes incorrect regulatory language or false claims can trigger legal settlements or fines. In industries like healthcare or financial services, the cost of noncompliance can exceed millions per incident.

2. Brand and buyer trust erosion

If a prospect receives misleading information from an AI-powered chatbot, the loss is not just the deal; it is confidence in the brand. Forrester reports that nearly one in five B2B buyers now expresses lower trust when interacting with AI-driven sales tools. Once trust declines, win rates and renewal rates decline with it.

3. Enterprise value erosion

As AI-driven missteps reach the market, such as data breaches, compliance lapses, or reputation hits, investors respond. Public companies that suffer governance failures often see immediate stock volatility and long-term brand damage. Poorly governed AI can therefore create a real, measurable drag on enterprise value.

4. Productivity illusion

Ungoverned AI can create a false sense of efficiency. It produces outputs faster but does not verify their accuracy or alignment with policy. Sales teams may mistake speed for improvement while quietly introducing errors that require costly human correction later.

Why the Top-Down Model of AI Governance Fails

Governance isn’t just about having a policy document in a drawer. It involves frameworks, workflows, human oversight, role clarity, data standards, validation and traceability across AI models. Key components of governance practices include:

Clear policies and procedures for how AI tools are selected, validated, deployed and retired.

Defined roles and responsibilities across central risk/compliance teams and business units.

Robust data management (quality, lineage, cataloguing) and auditability of model outputs.

Human-in-the-loop controls and continuous monitoring of model performance and business impact.

Democratized governance: empowering sales, marketing, and product teams with literacy and frameworks rather than relying solely on central silos.

Most enterprises already have AI policies. The problem is how those policies are applied. The traditional top-down approach, where central compliance or IT teams define rules and business units are expected to follow, no longer works.

In a sales context, top-down governance often results in disconnected policies that block productivity without enabling responsible use. Leadership might prohibit external GPT tools entirely, but sales teams, under pressure to hit quotas, still use them informally. Risk multiplies not because of negligence, but because governance is detached from reality.

The other flaw in the top-down model is speed. AI evolves daily, while governance cycles move quarterly. Policies lag behind usage, and business teams innovate faster than they are trained. In the absence of adaptive governance, enforcement becomes reactive rather than preventive.

True AI governance cannot be enforced by command. It must be embedded by design.

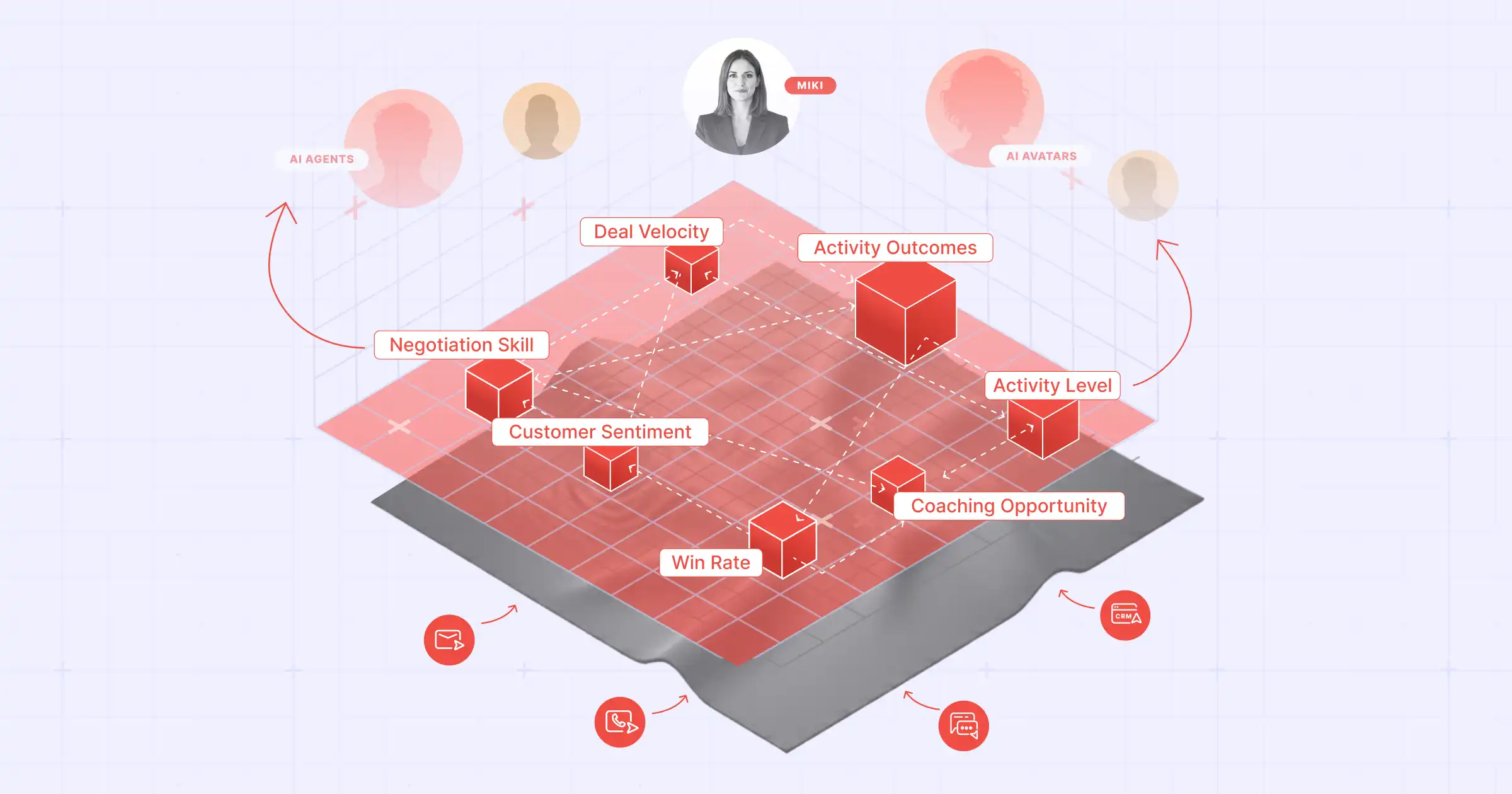

The Alternative: Embedded Governance and the AI Intelligence Quotient

A more effective governance model balances control with empowerment. Governance should not sit in isolation as a compliance layer; it must be embedded within the daily workflows, tools, and data systems that sales teams already use. Instead of issuing restrictions, organizations need to design architectures that make responsible AI use the natural default.

In this approach, central teams define standards and frameworks, while business units are trained and equipped to govern their own AI use. They operate within clear workflows, automated monitoring, measurable metrics, and continuous feedback loops.

At the core of this model is a higher AI Intelligence Quotient (AI IQ): the collective capability of the organization to understand how AI systems function, recognize when they may fail, and interpret their outputs responsibly. When sales teams possess a high AI IQ, governance becomes not a barrier to innovation but a foundation for trusted, autonomous execution.

A high AI IQ sales organization knows:

when to trust AI-generated insights and when to challenge them

how to verify data sources before acting on recommendations

how to flag anomalies or bias

and how to trace decisions back to their AI origin

When sales teams possess that literacy, governance stops being a bottleneck and becomes a competitive advantage. The goal is not to slow down innovation, but to scale it safely.

Building a Responsible AI Framework for Sales

Every sales organization can take three steps to move from ungoverned experimentation to responsible, value-creating AI adoption.

Step 1: Establish traceability and guardrails

Begin with visibility. Catalogue every AI tool used across your sales stack, including email generators, deal predictors, CRM copilots, and chatbots. Identify what data they access, what outputs they produce, and who reviews them. Create audit trails that log AI outputs and decisions.

Put AI guardrails in place that define acceptable use boundaries: what data AI can access, which prompts are restricted, and what review processes must be followed before outputs are shared with customers or integrated into systems. Guardrails serve as the first line of defense against data misuse, hallucinations, and compliance breaches.
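To make the idea of guardrails plus an audit trail concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any particular product: the restricted field names, the `AuditLog` class, and the `guarded_call` helper are all hypothetical, and the `generate` argument stands in for whatever AI tool a team actually uses.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: fields that must never be passed to an AI tool.
RESTRICTED_FIELDS = {"customer_ssn", "contract_value", "discount_floor"}


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would use durable storage."""
    entries: list = field(default_factory=list)

    def record(self, tool, user, prompt, output, allowed):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "tool": tool,
            "user": user,
            # Store a hash rather than the raw prompt to avoid copying sensitive text.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "output_chars": len(output),
            "allowed": allowed,
        }
        self.entries.append(entry)
        return entry


def check_guardrails(payload):
    """Return the restricted fields found in the payload (empty list means pass)."""
    return sorted(RESTRICTED_FIELDS.intersection(payload))


def guarded_call(log, tool, user, payload, generate):
    """Run `generate` only if the payload passes the guardrail; log either way."""
    prompt = json.dumps(payload, sort_keys=True)
    violations = check_guardrails(payload)
    if violations:
        log.record(tool, user, prompt, "", allowed=False)
        return None, violations
    output = generate(payload)
    log.record(tool, user, prompt, output, allowed=True)
    return output, []
```

In this sketch, blocked requests are logged as well as allowed ones, so the audit trail shows attempted misuse and not only successful calls.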

Step 2: Define human-in-the-loop controls

Automation should never replace accountability. Introduce checkpoints where humans validate AI-generated proposals, content, or forecasts before they reach customers or financial systems. Equip managers to review not only outcomes but also the decision paths AI takes.
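A human checkpoint of this kind can be sketched as a simple approval gate. The Python below is illustrative only; the `Draft`, `review`, and `send_to_customer` names are hypothetical and assume that every AI-generated item carries a status that only a human reviewer can change.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    content: str
    rationale: str  # the AI's stated reasoning, kept so reviewers can inspect it
    status: Status = Status.PENDING
    reviewer: Optional[str] = None
    note: str = ""


def review(draft, reviewer, approve, note=""):
    """Record a human decision on an AI-generated draft."""
    draft.status = Status.APPROVED if approve else Status.REJECTED
    draft.reviewer = reviewer
    draft.note = note
    return draft


def send_to_customer(draft):
    """Release content only after explicit human approval."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("Draft has not been approved by a human reviewer")
    return draft.content
```

Keeping the `rationale` alongside the content reflects the point about decision paths: the reviewer sees why the AI proposed something, not just what it proposed.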

Step 3: Monitor outcomes, not just policies

Governance must be tied to measurable results. Track how AI influences win rates, forecast accuracy, buyer satisfaction, and compliance incidents. Use these metrics to refine both tools and policies continuously.
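As a rough illustration of outcome monitoring, the Python sketch below computes three of these metrics from a list of deals. The `Deal` fields, the choice of mean absolute percentage error as the forecast accuracy measure, and the incidents-per-1,000-outputs rate are assumptions made for the example, not a prescribed standard.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    ai_assisted: bool
    won: bool
    forecast_amount: float
    actual_amount: float


def win_rate(deals):
    """Share of deals won; 0.0 when there are no deals."""
    return sum(d.won for d in deals) / len(deals) if deals else 0.0


def forecast_error(deals):
    """Mean absolute percentage error over won deals with a positive actual amount."""
    closed = [d for d in deals if d.won and d.actual_amount > 0]
    if not closed:
        return 0.0
    total = sum(abs(d.forecast_amount - d.actual_amount) / d.actual_amount for d in closed)
    return total / len(closed)


def governance_report(deals, compliance_incidents, ai_outputs):
    """Compare AI-assisted deals with the rest and report an incident rate."""
    ai = [d for d in deals if d.ai_assisted]
    other = [d for d in deals if not d.ai_assisted]
    return {
        "win_rate_ai": win_rate(ai),
        "win_rate_other": win_rate(other),
        "forecast_error_ai": forecast_error(ai),
        "incidents_per_1000_outputs": (
            1000 * compliance_incidents / ai_outputs if ai_outputs else 0.0
        ),
    }
```

Comparing AI-assisted deals against the rest is a deliberate choice here: it ties governance to whether AI is actually improving outcomes rather than only to whether policies were followed.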

These steps transform governance from static compliance into dynamic assurance, making AI safer, smarter, and more aligned with business outcomes.

How Aviso Ensures Governed AI in Sales

At Aviso, responsible AI is not an add-on. It is built into how our platform operates. Every capability follows strict governance principles that make AI explainable, compliant, and auditable at every step.

1. Built-in Guardrails

From data input to AI output, Aviso applies defined guardrails that control what data is accessed, how it is used, and where it is stored. Sensitive CRM and revenue data stay within secure enterprise boundaries, never exposed to public or unverified sources.

2. Human-Approved Autonomy

Aviso’s AI acts with structured autonomy. It analyzes and recommends, but human users review and approve all critical decisions. This ensures automation supports human judgment rather than replaces it.

3. Unified Data Governance

All data within Aviso is unified, traceable, and auditable. Data lineage is fully visible, preventing the fragmented flows that often cause inaccuracies or compliance issues in other AI systems.

4. Enterprise-Grade Security and Compliance

Aviso meets global enterprise standards for security and privacy, including GDPR, SOC 2, and ISO 27001. Encryption, data residency, and access controls are enforced across the platform to ensure full protection of customer data.

5. Continuous Monitoring and Responsible Learning

AI models are continuously monitored for bias, drift, and accuracy. Feedback loops from users are reviewed to keep outputs transparent, consistent, and aligned with governance standards.

By embedding governance and compliance into every layer of AI execution, Aviso enables enterprises to use AI safely, protect revenue, and avoid the risks associated with ungoverned use of AI.

Sustainable Growth Begins with Governed AI

Ungoverned AI in sales is not just a compliance issue. It is a strategic blind spot. It quietly drains trust, distorts data, and corrodes enterprise value from within. Yet the same forces that make AI risky, such as its speed, reach, and autonomy, also make it transformative when governed correctly.

Sales organizations that get this right will not just avoid fines or public crises. They will build trust capital with buyers, attract investor confidence, and unlock the full economic potential of generative AI.

The difference between those outcomes is not technology. It is governance.

And governance, when done right, is not the brake on AI. It is the steering wheel.

At Aviso, we help enterprises operationalize this vision, bringing explainable, auditable, and AI-first governance to every stage of the revenue process.