How Vector Indexing Powers Fast, Accurate Insights at Aviso

Oct 1, 2025

The use of Generative AI and Large Language Models (LLMs) is expanding rapidly. These systems can create realistic text, images, audio, and video, opening up entirely new ways for businesses to work. Companies are embedding GenAI into everyday workflows, from AI Agents and intelligent copilots to smarter search experiences and tools that help employees move faster and make better decisions.

But for GenAI to be truly useful in business contexts, it needs more than raw generation power. It needs context and memory. Whether it is a sales leader asking an AI assistant for a competitive playbook, or a customer success manager pulling up a relevant demo video, the expectation is simple: ask a question, and get the best answer instantly.

That is easier said than done. Sales organizations generate mountains of unstructured data every day, including call recordings, meeting transcripts, CRM notes, email threads, product demo videos, and more. Finding the right information in this flood of content is nearly impossible without the right structure.

This is where vector indexing comes in. It ensures GenAI systems can retrieve the most relevant information with both speed and accuracy. Instead of forcing an AI to scan through an entire warehouse of data, vector indexing acts like a smart shelving system that guides the system directly to the right section so it can surface the insights you need in milliseconds.

What Are Vector Embeddings?

Vector embeddings translate complex, unstructured data (like text, audio, video, or images) into a universal language that computers can work with: lists of numbers, also called vector representations.

Consider these two sales call snippets:

“We don’t have budget this quarter”

“Can you circle back next fiscal year?”

They may use different words, but they carry the same idea: a budget objection. When these snippets are converted into embeddings, their numeric vectors will be very close to each other in multi-dimensional space. Once data is converted into embeddings, it becomes searchable in a way that reflects meaning, not just keywords.

This makes embeddings powerful: instead of searching by exact words, systems can search by semantic similarity — finding content that means the same thing, even if it’s phrased differently.
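To make "close to each other" concrete, here is a minimal sketch of cosine similarity, one common way to compare embeddings. The three-dimensional vectors are hand-made illustrative values, not output from a real embedding model (real embeddings typically have hundreds of dimensions), and the third snippet is a hypothetical unrelated example added for contrast.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy "embeddings" (assumed values for illustration only).
budget_this_quarter = [0.9, 0.1, 0.2]    # "We don't have budget this quarter"
circle_back_next_year = [0.8, 0.2, 0.3]  # "Can you circle back next fiscal year?"
feature_question = [0.1, 0.9, 0.1]       # hypothetical unrelated snippet

sim_related = cosine_similarity(budget_this_quarter, circle_back_next_year)
sim_unrelated = cosine_similarity(budget_this_quarter, feature_question)

print(f"related snippets:   {sim_related:.2f}")
print(f"unrelated snippets: {sim_unrelated:.2f}")
```

With these toy values, the two budget objections score much higher against each other than against the unrelated snippet, which is the property semantic search relies on.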

What Is Vector Indexing?

A single company may generate millions of embeddings across calls, documents, and videos. Once you have millions or even billions of these vectors, you face a new problem: how do you search through them quickly?

Vector indexing is the solution. The purpose of a vector index is to search and retrieve data from a large set of vectors. It's like creating a highly efficient catalog or index for your library of vectors. An index is a special data structure that organizes the vectors in a way that dramatically speeds up the search process.

Why is this important to generative AI applications? Vector representations of data bring context to generative AI models, and a vector index makes it practical to find the specific data we are looking for in a large set of those representations. Instead of checking every vector, a search query can use the index to narrow the search to a small, highly relevant neighborhood of vectors. This technique is known as Approximate Nearest Neighbor (ANN) search: it trades a small amount of accuracy for a large gain in speed.

Types of Vector Indexing (Most Common Approaches)

When it comes to handling embeddings at scale, not all indexing methods are equal. Over the years, a handful of approaches have emerged as the “go-to” choices because they balance speed, accuracy, and scalability. Let’s break down the most commonly used ones in simple terms:

1. Flat (Brute Force)

A Flat index is the most basic approach. It doesn't have a complex structure; it simply compares your search query to every single vector in the entire database. Think of it as manually checking every book on every shelf in a library. While this method guarantees 100% accuracy, it becomes extremely slow and computationally expensive as your dataset grows, making it impractical for most real-world applications.
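As a rough illustration, a flat search fits in a few lines. This sketch assumes Euclidean distance and plain Python lists; the function names are illustrative, not taken from any particular library.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def flat_search(query, vectors, k=3):
    """Exact k-nearest-neighbor search: score every vector, keep the k closest."""
    scored = [(euclidean(query, v), i) for i, v in enumerate(vectors)]
    scored.sort()
    return [i for _, i in scored[:k]]

vectors = [[0.0, 0.0], [1.0, 1.0], [0.9, 1.1], [5.0, 5.0], [0.1, -0.1]]
nearest = flat_search([1.0, 1.0], vectors, k=2)
print(nearest)  # ids of the two closest vectors
```

Every query touches every stored vector, so the work grows in direct proportion to the size of the dataset. That linear cost is what the other index types try to avoid.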

2. HNSW (Graph-Based Search)

HNSW (Hierarchical Navigable Small World) is a powerful graph-based method. It organizes vectors as nodes in a network, connecting each point to its nearest neighbors. It builds multiple layers, like a map with highways, main roads, and local streets. A search starts on the "highway" layer to quickly find the general vicinity of the target, then zooms into the "local street" layers for a precise match. This approach is known for being extremely fast and highly accurate, making it one of the most popular choices for real-time applications.
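The highway-then-local-streets idea can be sketched with a heavily simplified two-layer graph. This is not a full HNSW implementation: real HNSW assigns nodes to layers at random, inserts them incrementally, and keeps a list of candidates during search. Here the top layer is simply every 20th vector, the graphs are built by brute force, and the search follows a single greedy path. All names and parameters are assumptions made for illustration.

```python
import math
import random

def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_layer(ids, vectors, m):
    """Link each node to its m nearest neighbors in this layer (edges go both ways)."""
    graph = {i: set() for i in ids}
    for i in ids:
        ranked = sorted((j for j in ids if j != i),
                        key=lambda j: dist(vectors[i], vectors[j]))
        for j in ranked[:m]:
            graph[i].add(j)
            graph[j].add(i)
    return graph

def greedy_search(graph, vectors, query, entry):
    """Keep stepping to a neighbor that is closer to the query until none is."""
    current = entry
    while True:
        # current is listed first, so ties never trigger a move
        candidates = [current] + sorted(graph[current])
        best = min(candidates, key=lambda j: dist(vectors[j], query))
        if best == current:
            return current
        current = best

def layered_search(layers, vectors, query, entry):
    """layers[0] is the sparse 'highway' layer; the last layer holds every vector."""
    current = entry
    for graph in layers:
        current = greedy_search(graph, vectors, query, current)
    return current

random.seed(0)
vectors = [[random.random(), random.random()] for _ in range(200)]
all_ids = list(range(len(vectors)))
top_ids = all_ids[::20]  # simplification: fixed stride instead of random levels

layers = [build_layer(top_ids, vectors, m=3), build_layer(all_ids, vectors, m=8)]
query = [0.5, 0.5]
found = layered_search(layers, vectors, query, entry=top_ids[0])
print(found, dist(vectors[found], query))
```

The top layer takes long hops across the dataset, and the bottom layer refines the result among close neighbors. Because the walk is greedy, the answer is a nearby vector but not guaranteed to be the single closest one, which is the "approximate" in approximate nearest neighbor search.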

3. Clustering (IVF)

Clustering, typically implemented as an inverted file (IVF) index, works by grouping similar vectors together into “buckets.” When you search, instead of scanning everything, the system jumps straight to the most relevant bucket (or the few closest buckets) and only looks inside. Imagine organizing all your sales collateral by topic: “Forecasting,” “Pipeline,” “Objections.” If you’re looking for something about forecasting, you ignore the “Objections” folder entirely. This saves time and resources and dramatically speeds up the search compared to a flat index, offering a good balance between speed and accuracy, though it can occasionally miss edge cases that sit near the boundary between two groups.
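The bucket idea can be sketched as follows, assuming a basic k-means step to form the buckets and a hypothetical `nprobe` parameter that controls how many buckets are scanned per query. The data is synthetic, and the function names are illustrative rather than any library's API.

```python
import math
import random

def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_centroid(v, centroids):
    return min(range(len(centroids)), key=lambda c: dist(v, centroids[c]))

def kmeans(vectors, n_clusters, iterations=10, seed=0):
    """Basic k-means: returns one centroid per bucket."""
    rng = random.Random(seed)
    centroids = rng.sample(vectors, n_clusters)
    for _ in range(iterations):
        buckets = [[] for _ in centroids]
        for v in vectors:
            buckets[nearest_centroid(v, centroids)].append(v)
        # An empty bucket keeps its previous centroid.
        centroids = [[sum(col) / len(b) for col in zip(*b)] if b else centroids[c]
                     for c, b in enumerate(buckets)]
    return centroids

def build_ivf(vectors, centroids):
    """Inverted lists: bucket id -> ids of the vectors assigned to that bucket."""
    lists = {c: [] for c in range(len(centroids))}
    for i, v in enumerate(vectors):
        lists[nearest_centroid(v, centroids)].append(i)
    return lists

def ivf_search(query, vectors, centroids, lists, k=3, nprobe=1):
    """Scan only the nprobe buckets whose centroids are closest to the query."""
    probe = sorted(range(len(centroids)),
                   key=lambda c: dist(query, centroids[c]))[:nprobe]
    candidates = [i for c in probe for i in lists[c]]
    candidates.sort(key=lambda i: dist(query, vectors[i]))
    return candidates[:k]

# Synthetic data: three loose groups of 50 two-dimensional vectors each.
rng = random.Random(42)
centers = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
vectors = [[cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5)]
           for cx, cy in centers for _ in range(50)]

centroids = kmeans(vectors, n_clusters=3)
lists = build_ivf(vectors, centroids)
query = [5.0, 5.0]
approx = ivf_search(query, vectors, centroids, lists, k=3, nprobe=1)
exact = ivf_search(query, vectors, centroids, lists, k=3, nprobe=3)
```

Raising `nprobe` scans more buckets, which recovers the edge cases near bucket boundaries at the cost of more comparisons. Setting it to the total number of buckets is equivalent to a flat search.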

4. Compression (PQ, ScaNN, etc.)

Sometimes the challenge isn’t just speed; it’s memory. Compression methods such as product quantization (PQ) shrink the size of stored vectors so you can keep far more of them in the same amount of memory. You lose a bit of detail in the process, but you gain the ability to search massive archives efficiently. Think of it like keeping summaries of old calls instead of full transcripts: you may not have every word, but you can still find the main ideas quickly. This is especially useful for historical or “cold storage” data.
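To show the trade-off between size and detail, here is a sketch of scalar quantization, a simpler technique than product quantization. Each number is stored as a single byte instead of a 4-byte float, a 4x reduction. Product quantization goes further by splitting each vector into sub-vectors and storing only the id of the closest codebook entry for each one. The function names and sample values below are assumptions for illustration.

```python
def train_quantizer(vectors):
    """Record the per-dimension min and max so values can be mapped to 0..255."""
    lows = [min(col) for col in zip(*vectors)]
    highs = [max(col) for col in zip(*vectors)]
    return lows, highs

def encode(vector, lows, highs):
    """Compress each float to one byte (values outside the range are clamped)."""
    codes = []
    for x, lo, hi in zip(vector, lows, highs):
        scale = (hi - lo) or 1.0  # avoid dividing by zero on constant dimensions
        level = round((x - lo) / scale * 255)
        codes.append(min(255, max(0, level)))
    return bytes(codes)

def decode(code, lows, highs):
    """Rebuild an approximate vector from its byte codes."""
    return [lo + (c / 255) * ((hi - lo) or 1.0)
            for c, lo, hi in zip(code, lows, highs)]

vectors = [[0.12, -1.5, 3.3], [0.80, 0.25, 2.1], [0.45, 1.75, 0.0]]
lows, highs = train_quantizer(vectors)
codes = [encode(v, lows, highs) for v in vectors]
restored = [decode(c, lows, highs) for c in codes]

for original, approx in zip(vectors, restored):
    print(original, "->", [round(x, 3) for x in approx])
```

The restored values are close to the originals but not identical. That small loss is the "bit of detail" given up in exchange for a much smaller index.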

Which Type of Indexing to Use and When

Indexing approaches are not one size fits all. The type of index that works best often depends on the nature of the application and its performance requirements. Broadly, these fall into three categories:

Real-Time Applications: These require sub-second responses where speed is critical, such as live chatbots, AI copilots, or recommendation engines.

Best fit: Graph-based indexes such as HNSW are widely used here because they balance accuracy and speed even at very large scale. Dynamic indexing can also help by starting simple and automatically switching to faster structures as datasets grow.

Batch Processing Applications: In these cases, accuracy and completeness matter more than instant results. Examples include ingesting large document collections or preparing datasets for analytics.

Best fit: Flat indexes can be useful since they deliver exact matches and speed is less of a concern. Clustering-based indexes combined with compression methods can also be used to handle very large datasets efficiently while maintaining high recall.

Hybrid Applications: Many workflows combine both needs. A system may rely on pre-built indexes but also need to add new data in real time, such as when a user uploads fresh content that must be searchable right away.

Best fit: A mix of indexing methods works here. Graph-based indexes can serve real-time queries while clustering- or compression-based indexes manage historical or less frequently used data. Dynamic indexes can also adapt automatically depending on the data size and query pattern.

Business Implications and Use Cases

Vector indexing is not just a technical detail. It directly impacts how quickly and accurately generative AI systems can surface insights. Below are four common use cases where indexing makes a difference:

Document Retrieval: Businesses often need to search through large collections of documents such as knowledge bases, FAQs, or reports. Indexing makes it possible to scan large volumes of sales and enablement content instantly, ensuring that the right answer is always available when teams need it.

Transcript Search: Transcripts from meetings, webinars, or training sessions contain valuable insights but are often long and difficult to navigate. Indexing allows users to quickly locate specific parts of transcripts, such as when pricing or competition is discussed, without needing to replay entire recordings.

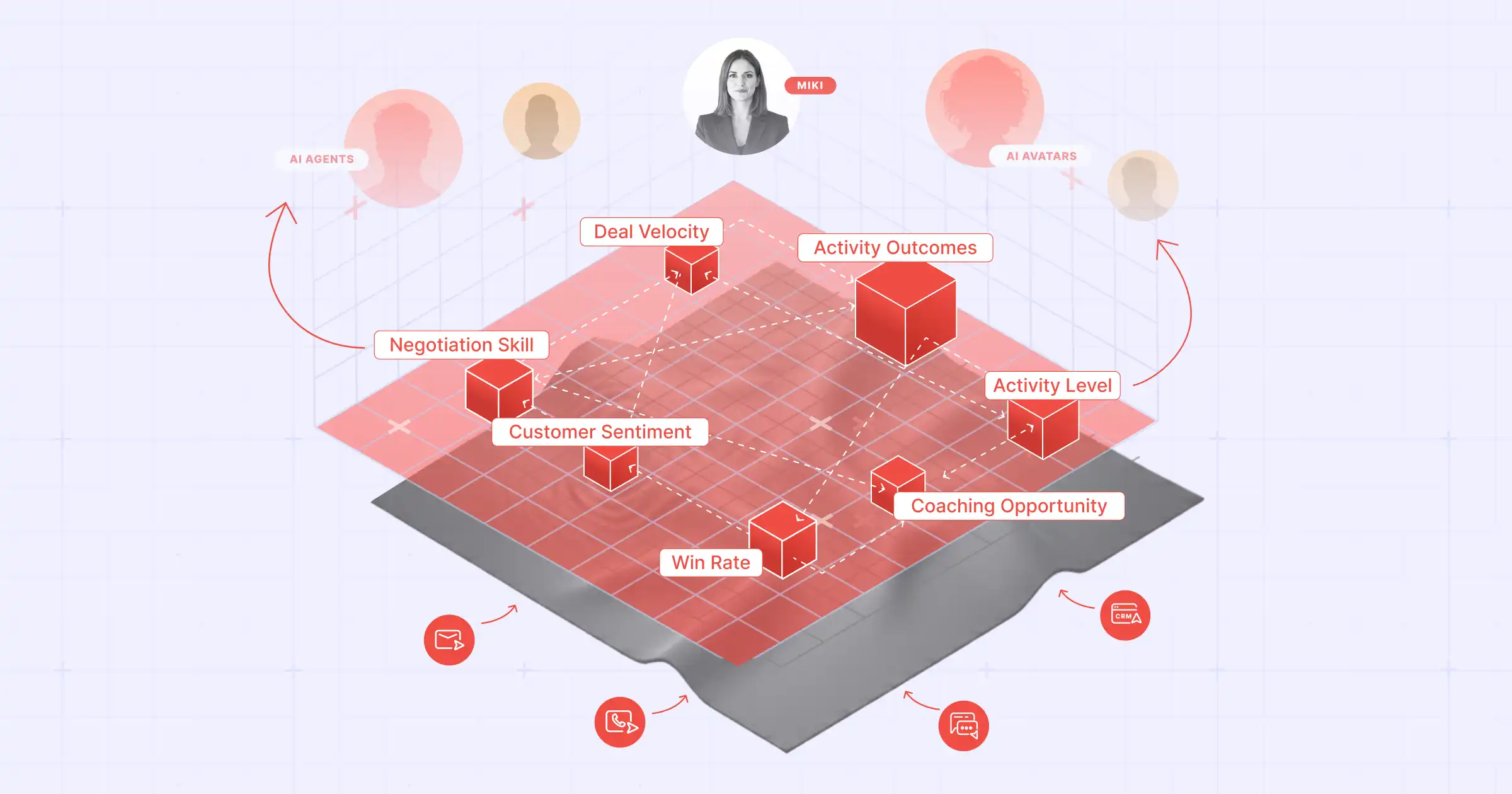

Video and Media Retrieval: With the growth of video-based training and product demos, indexing is essential to surface the right media clips on demand. Without it, valuable content often goes unused. (See how Aviso’s AI Avatars use video indexing to recommend the most relevant demo or tutorial when a customer asks about a feature like forecasting or pipeline management.)

Low-Latency AI Assistants: AI assistants that aim to answer questions in real time must minimize delays. Indexing helps pre-organize data so answers are returned both quickly and accurately.

For instance, MIKI, Aviso’s Agentic AI Chief of Staff, uses indexing to cluster topics and reduce retrieval latency, ensuring users receive instant, context-aware insights and recommendations without waiting.

Conclusion: Winning with the Right Indexing Strategy

Vector indexing may sound like a backend optimization, but in reality it’s the difference between an AI system that feels magical and one that feels laggy and frustrating.

For Aviso, indexing ensures that reps, managers, and customer success teams always get the fastest, most relevant answers across documents, calls, videos, and CRM data.

The broader lesson for enterprises is simple: as your data grows, especially unstructured data, your AI platform is only as good as its indexing. Picking the right vector indexing strategy isn’t just a technical detail. It’s a business-critical choice.

Looking ahead, as datasets explode and GenAI becomes central to business operations, vector indexing will be the silent but decisive factor separating tools that deliver real productivity from those that remain novelties. At Aviso, we’re betting on indexing as the backbone of AI-driven sales excellence.

It’s why our AI can power real-time call analysis, intelligent AI Avatars, and AI Agents that drive end-to-end GTM motions.